User experience designers' guide: How to document the screen reader user experience for an iOS or Android app

User experience designers are responsible for designing the user experience. Period. A visual alone is not enough, because it doesn't ensure a good user experience (UX) for all users. An accessible design process includes documenting the non-visual UX for screen reader users. This also helps achieve a good user experience for users of other types of assistive technology.

This guide aims to demystify an area that is often seen as daunting. If you don’t know where to start, or weren’t aware that this was your responsibility, this guide will introduce you to the concepts of screen reader UX and how to document them for your component. It’s actually fairly straightforward, and just an extra step in your process. It’s OK to concentrate on the visual user experience first, because you’ve got to start somewhere. However, always try to keep the screen reader UX in mind.

This guide won’t necessarily teach you how to make your user experience accessible, or what best practice might be for your component. This is where accessibility guidelines come into play, such as the BBC Mobile Accessibility Guidelines for web and native apps, along with platform-specific guides like the iOS Human Interface Accessibility Guidelines, the Android Accessibility Guide or the Material Design Accessibility Guidelines. WCAG is also a valuable resource: although it is web-specific, many of the same principles apply. Consult guidelines as part of your process, and also consider users of other types of assistive technology.

Thinking about everything at once can be overwhelming, so let’s focus on the screen reader UX. Using your visual as a starting point, this guide takes you step by step through how to document it for native app content. For web content, see the web guide.

Don’t leave it to chance

- Knowledge - Like designers, engineers have different skill sets. Some know more about accessibility than others. This documentation will enable you to better communicate and collaborate with engineers.

- Interpretation - By looking at a visual, many engineers will be able to interpret the screen reader UX. However, their interpretation may not match what you intended.

- Creative license - Visual styling doesn’t always follow the rules. For example, a component might include a heading with low-key styling because you didn’t want to draw attention to it. Don't assume an engineer will know that this is a heading if it doesn’t look the same as other headings.

- Consistency - You cannot assume that all engineers will interpret a visual in the same way. If you work in a large team, it’s more than likely multiple engineers will work on your component.

7 top tips

- Components not screens - Document at component level, as you would for the visual UX.

- Context - Don’t forget to consider the context of the component at the screen level. This is particularly important when considering heading and container structure.

- Consistency - Ensure you have a consistent user experience. Consistency is good for all users, both at component and screen level.

- Verbosity - Be careful not to make the screen reader UX more verbose than it needs to be. It should be at a similar level to that of the visual UX.

- Engineers are your friends - If it’s a complex component, collaborate with an engineer to document the screen reader UX. Engineers may be able to suggest approaches that you hadn’t considered. This also makes an excellent starting point for collaboration with engineering.

- Use a screen reader - Get to know your users. Designing an iteration? Have a go at using the testing steps to navigate through the existing iteration. The testing steps enable anyone to use the VoiceOver screen reader on iOS or the TalkBack screen reader on Android for the first time.

- Always - It’s always important to document the screen reader UX, even if it's a small simple component.

Step by step

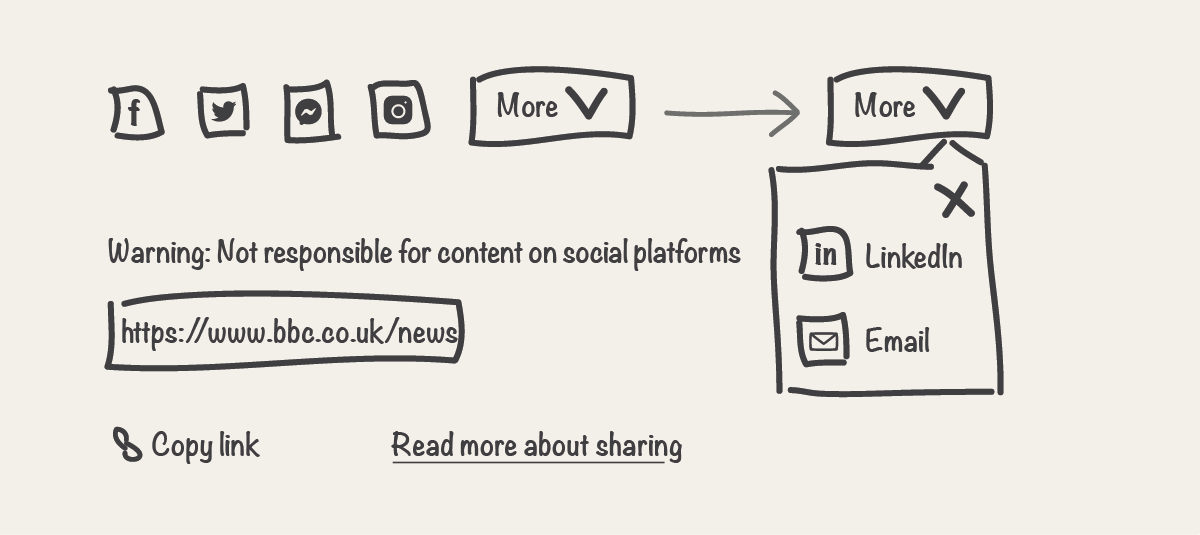

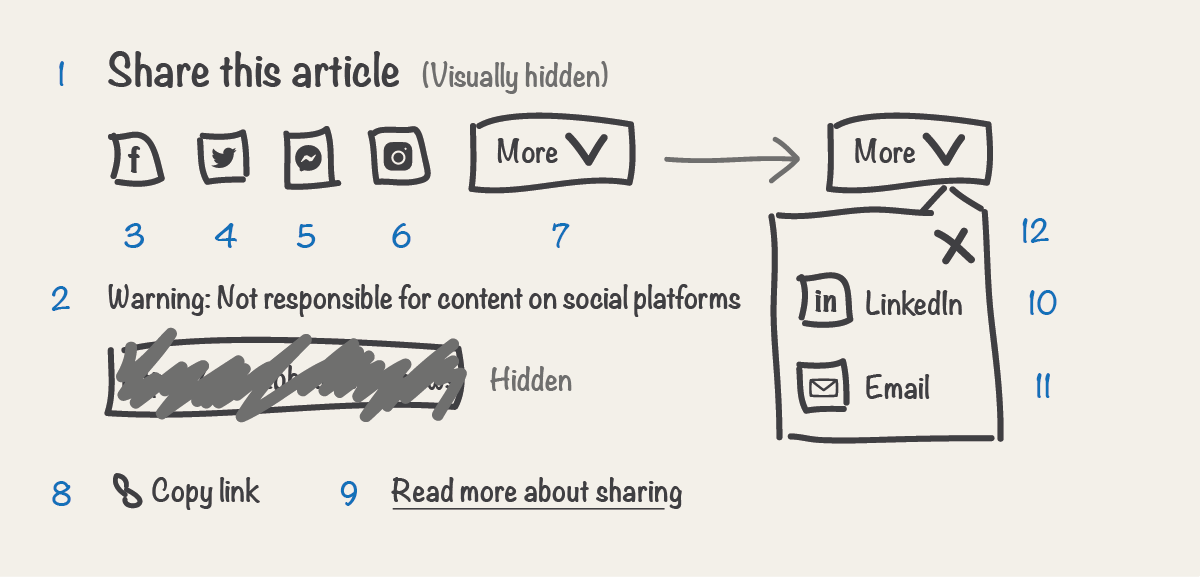

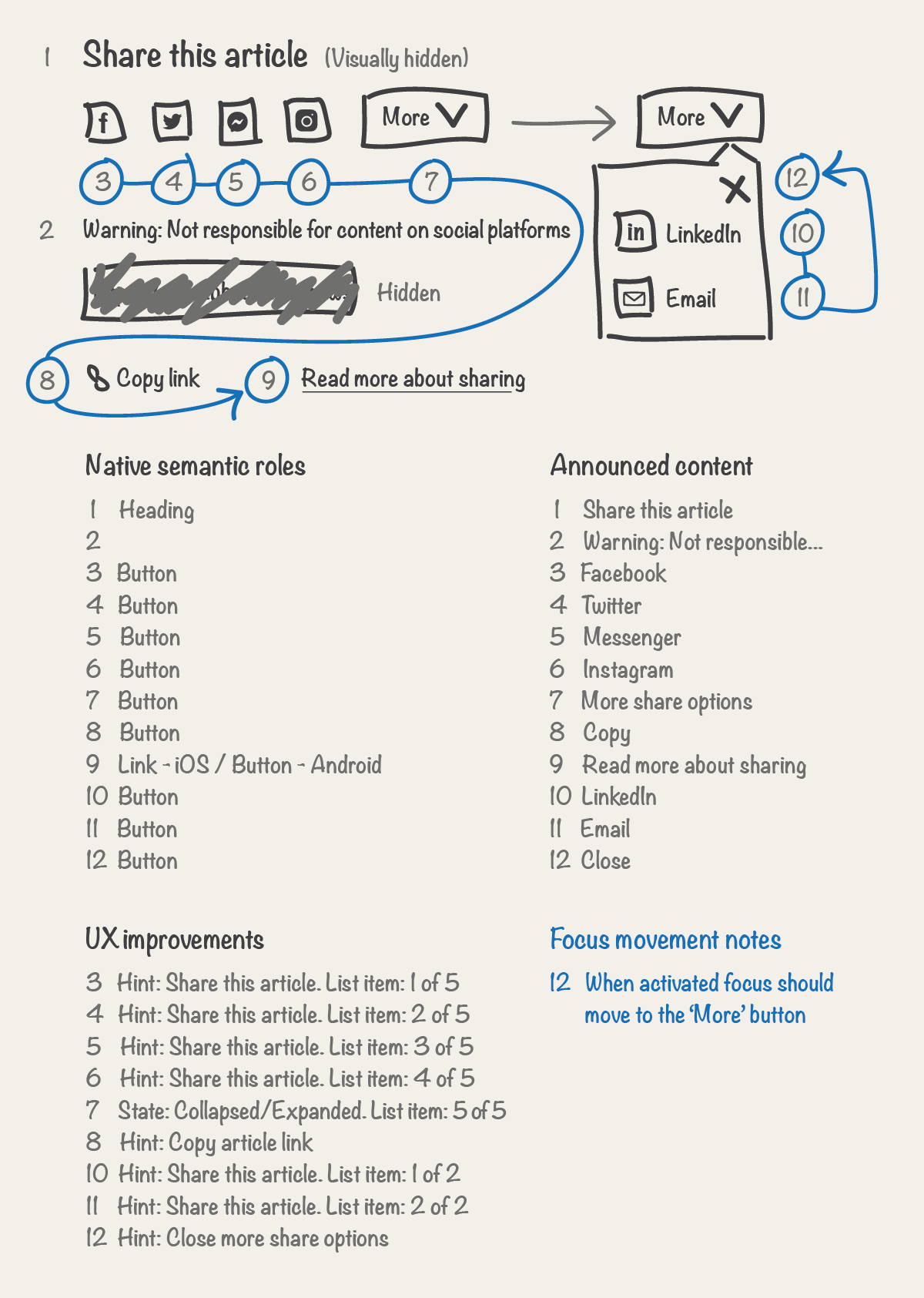

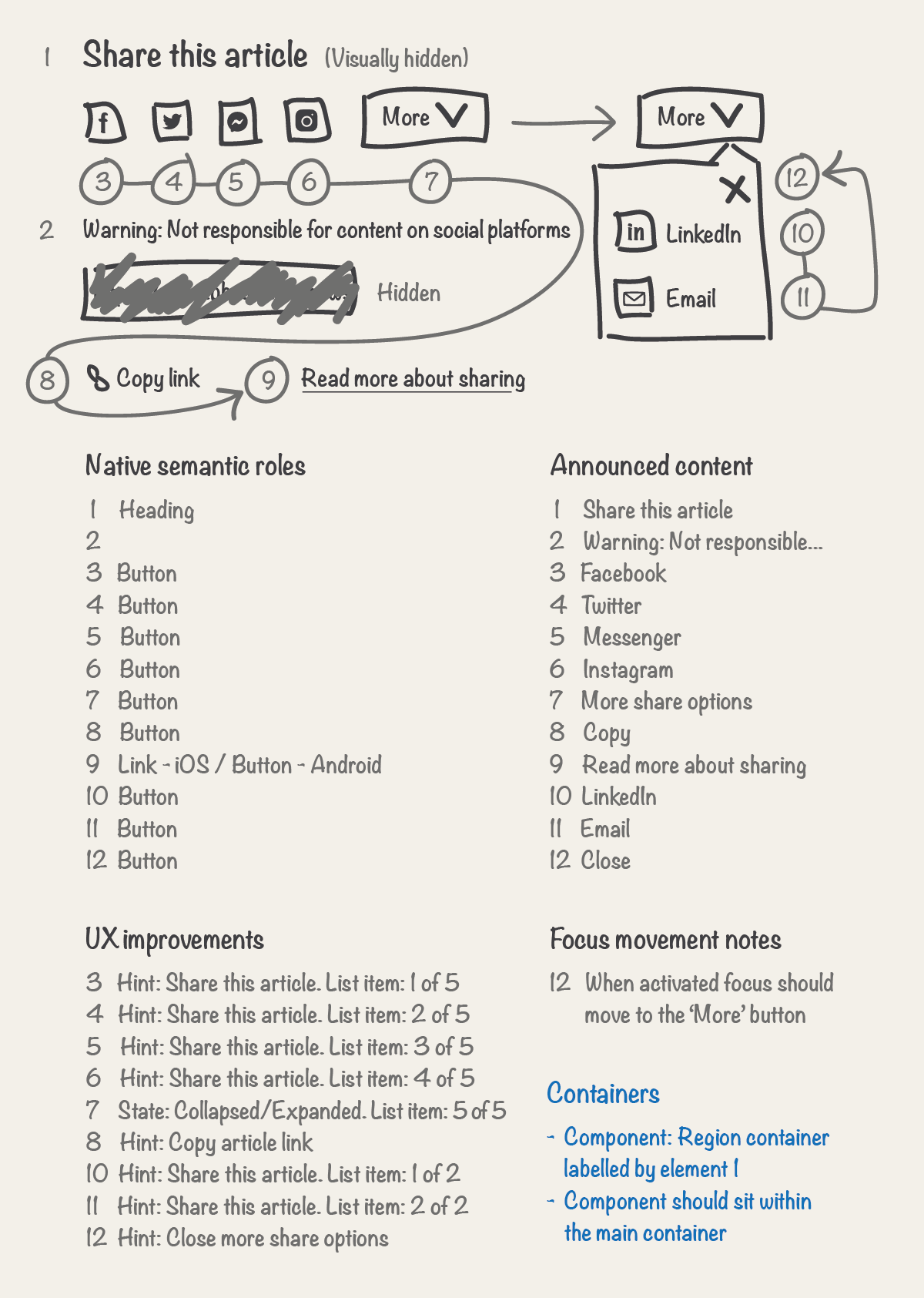

Using your visual as a starting point, we’ll take you step by step through how to document the screen reader UX, introducing concepts along the way. We’ll be using a fictional ‘Share’ component as an example. In a native app, best practice would be to implement content sharing through a native UI.

Step 1 - Pencil and paper

As with a visual, you might not get this right the first time, so doing it with pencil and paper will save you time. You can then document the results in a digital format afterwards.

So let’s start! Grab a pencil (or a pen, if you prefer), then do one of the following:

- Draw a rough sketch of the component (similar to the sketch of our example ‘Share’ component)

- Print a wireframe

- Print a visual

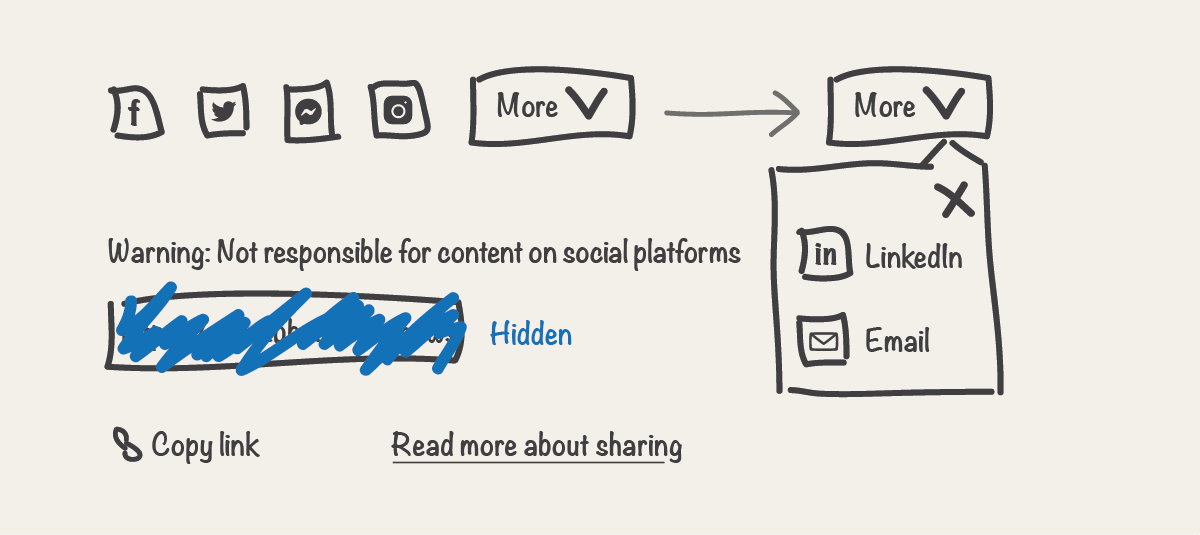

Step 2 - Cross out elements you don’t need

Are there any elements in the visual user experience that you don’t need in the screen reader UX? For example, an element that might not be relevant or make sense to a screen reader user, or one that doesn't add anything to their user experience.

Best practice would almost always be to have the same elements for all users. There may sometimes be exceptions, as user needs in the visual and non-visual UX can vary, but the UX should be comparable. Before crossing out an element, question why one user group would have an element and not another. Also keep in mind that you will be hiding this content from other assistive technologies, such as speech recognition and reading solutions.

Cross out elements you don't need and label them as hidden elements. They will still form part of the visual user experience. In our example ‘Share’ component, the input field element containing the URL of the link is for illustrative purposes only, so we’ll cross it out.

Other examples of elements you might not need

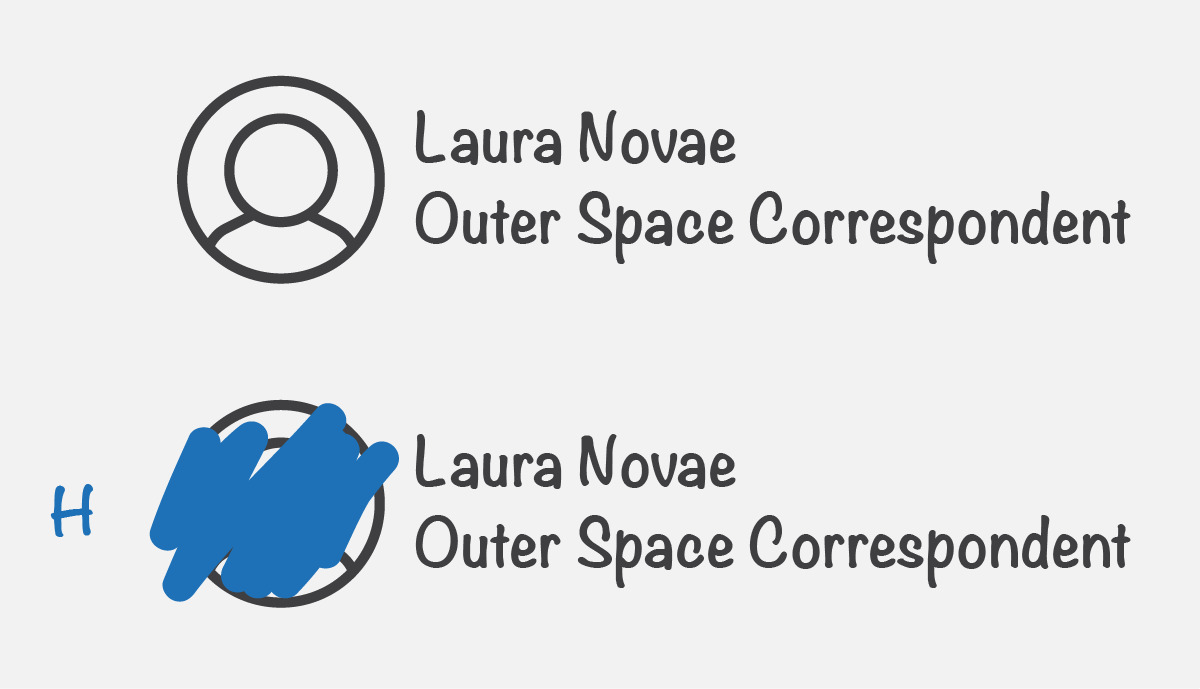

Images

Is there a decorative or presentational image that doesn’t need alternative text (alt text)? Or an image whose alt text would repeat the associated text content? For example, in an article byline the portrait photo might not need alt text if it would repeat the text next to it, e.g. the name of the author.

Alternatives

Is there any content that you are providing an alternative for? For example, an interactive map that can't be made accessible.

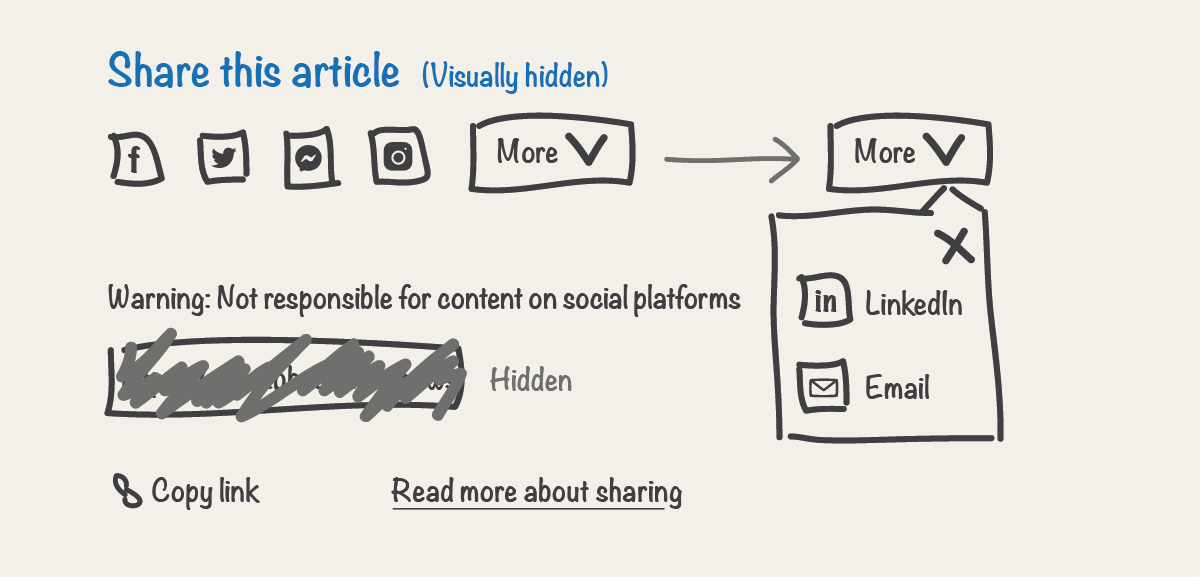

Step 3 - Add any extra elements

Would any additional elements improve the user experience for screen reader users? Such elements will rarely be needed and should be used sparingly.

As in the previous step, best practice would almost always be to have the same elements for all users. Before adding an extra element, question why one user group would have an element and not another. Also keep in mind that it's easy to forget extra elements are there, as you can’t see them. In a future iteration these elements could be forgotten about and no longer make sense.

Draw in any extra elements and label them as visually hidden elements, as we don’t want to add them to the visual user experience. In our example, we’ll add a heading element so screen reader users can easily identify and find this content. Don’t forget to consider the context of the component at the screen level: a heading element may not be appropriate for all ‘Share’ components.
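If you also keep a digital record of Steps 2 and 3, the crossed-out and drawn-in elements can be captured as data. The TypeScript below is a minimal sketch under our own assumptions: the `ShareElement` type, the `exposure` values and the element ids are hypothetical and not part of any platform API. It records part of the example ‘Share’ component and lists which elements each user group encounters.

```typescript
// Hypothetical documentation model, not a platform API.
// "both": seen and announced
// "hidden": crossed out in Step 2 (seen, not announced)
// "visuallyHidden": drawn in during Step 3 (announced, not seen)
type Exposure = "both" | "hidden" | "visuallyHidden";

interface ShareElement {
  id: string;
  description: string;
  exposure: Exposure;
}

// A partial record of the example 'Share' component.
const shareComponent: ShareElement[] = [
  { id: "heading", description: "Heading for the share options", exposure: "visuallyHidden" },
  { id: "url", description: "Input field containing the URL of the link", exposure: "hidden" },
  { id: "facebook", description: "Facebook share button", exposure: "both" },
  { id: "close", description: "'X' close button", exposure: "both" },
];

// Elements a screen reader user will encounter.
function screenReaderElements(elements: ShareElement[]): ShareElement[] {
  return elements.filter((element) => element.exposure !== "hidden");
}

// Elements a sighted user will see.
function visualElements(elements: ShareElement[]): ShareElement[] {
  return elements.filter((element) => element.exposure !== "visuallyHidden");
}

console.log(screenReaderElements(shareComponent).map((e) => e.id).join(", "));
// heading, facebook, close
console.log(visualElements(shareComponent).map((e) => e.id).join(", "));
// url, facebook, close
```

Writing the two lists side by side makes any difference between the experiences explicit, which is exactly what you should be able to justify in a design review.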

Step 4 - Define the content order

In what order should a screen reader read through the elements? To illustrate this, add a number next to each element. Always try to make the order of elements match the visual order. A mismatch could lead to confusion for some users, such as a sighted screen reader user. It will also make building the component more complex.

Occasionally, the desired visual order just can’t give a good experience for a screen reader user. In our example, the ‘X’ close button is visually displayed at the top right, as is best practice. However, announcing it before the other share options may not provide the best screen reader UX, so on this occasion we'll change the content order for screen reader users.

Another example is information that is key to an interaction, such as the text “Warning: Not responsible for content on social platforms” in our example. In order for a screen reader user to make an informed decision, this information should be read before the share buttons. Ideally, this warning text should be displayed before the buttons in the visual too, as this would help other users as well.
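Numbering elements in Step 4 effectively records two orders for each element: where it appears visually and when it is read. As a hedged sketch (the `OrderedElement` type, ids and numbers are hypothetical, and the visually hidden heading has no visual position), the function below compares the two sequences and flags every visible element that is read out of visual order, so deliberate exceptions such as the ‘X’ close button and the warning text can be called out for engineers.

```typescript
// Hypothetical record of Step 4: visual position vs screen reader position.
interface OrderedElement {
  id: string;
  visualOrder: number | null; // null for visually hidden elements
  readingOrder: number;
}

// Returns ids of visible elements whose position in the reading sequence
// differs from their position in the visual sequence.
function orderMismatches(elements: OrderedElement[]): string[] {
  const visible = elements.filter((e) => e.visualOrder !== null);
  const byVisual = [...visible].sort((a, b) => (a.visualOrder ?? 0) - (b.visualOrder ?? 0));
  const byReading = [...visible].sort((a, b) => a.readingOrder - b.readingOrder);
  return byReading.filter((e, i) => byVisual[i]?.id !== e.id).map((e) => e.id);
}

const shareOrder: OrderedElement[] = [
  { id: "heading", visualOrder: null, readingOrder: 1 },
  { id: "warning", visualOrder: 4, readingOrder: 2 },
  { id: "facebook", visualOrder: 2, readingOrder: 3 },
  { id: "more", visualOrder: 3, readingOrder: 4 },
  { id: "close", visualOrder: 1, readingOrder: 5 },
];

console.log(orderMismatches(shareOrder).join(", "));
// warning, close
```

Because this is a positional comparison, moving one element can flag several others; treat the output as a prompt to review and explain each mismatch, not as an error list.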

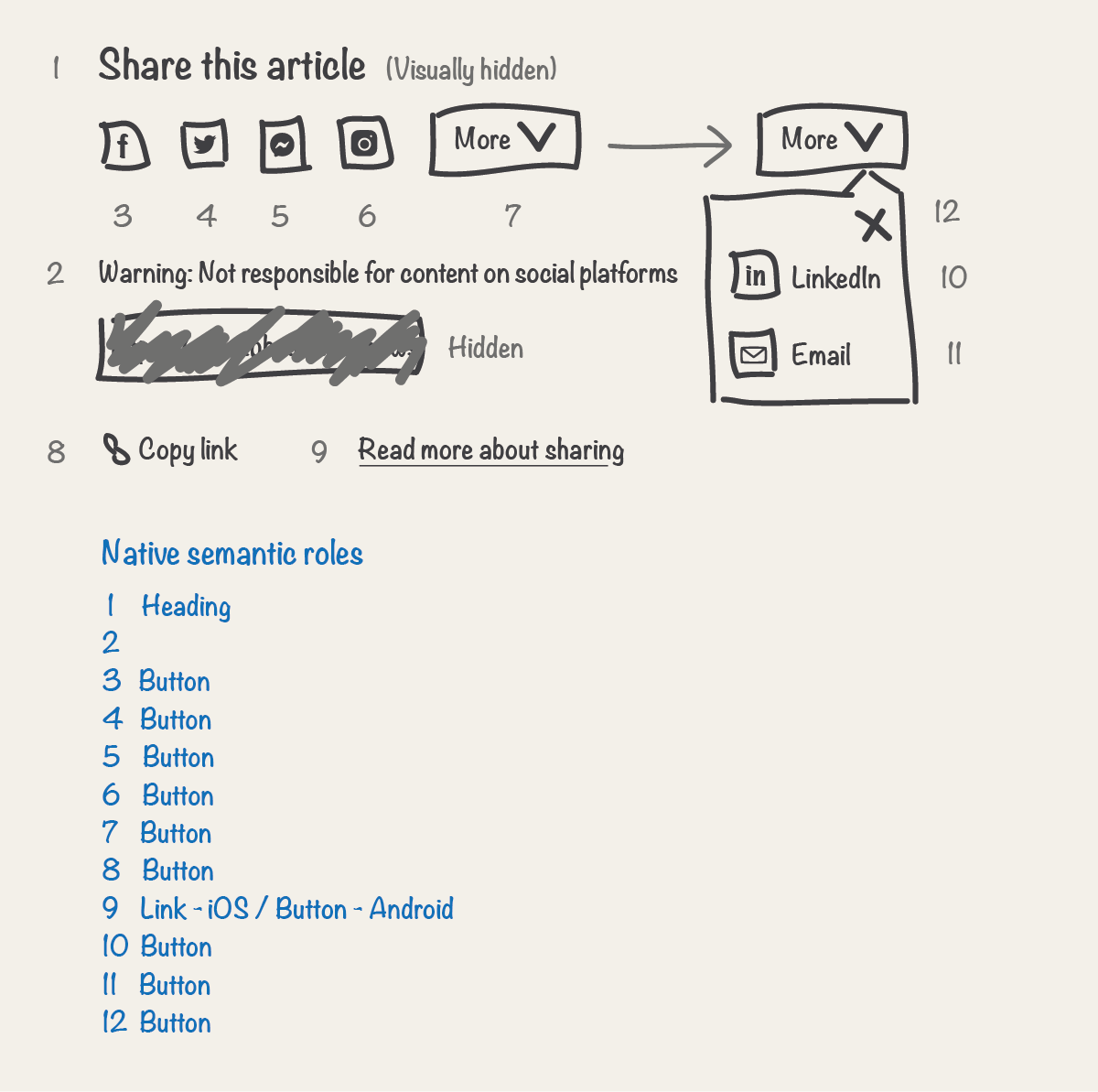

Step 5 - Define native semantic roles

In a well designed visual user experience, a sighted user should be able to identify elements, their semantic roles and any expected behaviours simply by looking at them. For example, when viewing an article, you can usually identify text that has a semantic role of heading because its font size is larger than that of the surrounding text. For screen reader users you need to convey this in another way, by using meaningful code. This is often referred to as semantic code.

Semantic code defines the roles of elements and relationships between elements, which then conveys the meaning and structure of content to screen reader users. This is usually conveyed to the user alongside the announced content of elements. This also tells the screen reader how elements behave or should be treated.

Some platforms have a wider range of semantic roles that you can natively apply than others. Web content has a vast array of natively available semantic roles compared to a native app, and an iOS app currently has more semantic roles natively available than an Android app.

Depending on the platform, semantics are defined in the code via different methods. However, the outcome will always be the same: an element with a role of button will always be a button. You don’t need to know how to write the code, though some basic knowledge will be an advantage.

- HTML primarily defines semantic roles of elements and structure of web content.

- Traits primarily define semantic roles of elements and structure of iOS app content.

- Roles primarily define semantic roles of elements and structure of Android app content.

Think about every element in your component and the associated semantic roles for the non-visual user experience. Consider what role is the most appropriate to convey the meaning you had intended from the visual styling. Strip away the presentation styling and what are you left with? A heading, an image, a button etc.

Define native semantic roles for relevant elements along with any platform specifics. If appropriate, also document heading levels for elements with a role of heading. Standard UI elements usually come with default roles that define their semantics, but it is still important to document them. In our example, we’ll define native semantic roles of heading, button and link. The role of link isn’t available for Android, so we’ll document an alternative.

Which native semantic roles are the most appropriate for app content

Native semantic roles are limited for native apps compared to those of HTML, and therefore the UX is simpler. As per HTML, elements can have more than one role. However, if there isn’t an appropriate native role, don’t define anything; no semantics will then be announced with this content. Not sure which roles to define? Collaborate with an engineer. You will usually only need to use a handful of native roles from the following:

| Role | Description | Semantic role announcement | Coding details |
|---|---|---|---|
| Button | Buttons are actionable elements, for both in-page interactions and those between screens within the app | Button | See button for iOS and button for Android |
| Heading | Headings divide content into sections, such as the title of a component. Consider whether a heading level is also appropriate. | Heading | See header for iOS and heading for Android |
| Image | An image element | 'Image' with VoiceOver and 'Graphic' with TalkBack | See image for iOS and image for Android |
| Link | Links take you out of the app to web content. Note: link semantics aren’t available for Android apps; use button instead. | Link | See link for iOS |
| Search field | A search field | Search field | See searchField for iOS and search for Android |
| Tab | Tabs display content via panels, one at a time | Tab | See UITabBar for iOS and Material tabs for Android |

For iOS, the UIKit documentation lists all roles that are available natively via traits. For Android, the developer guide lists all UI roles available natively under 'look and feel'.
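The role table can also be read as a lookup from the role you document to what is announced on each platform, including the Android fallback for links. The sketch below encodes only what the table states. The `Role`, `Platform` and `documentedRole` names are our own, and real announcements can vary with screen reader version, language and user settings.

```typescript
type Role = "button" | "heading" | "image" | "link" | "searchField" | "tab";
type Platform = "iOS" | "Android";

interface DocumentedRole {
  role: Role;
  announced: string;
}

// Encodes the role table: which role to document and the semantic role
// announcement expected with VoiceOver (iOS) or TalkBack (Android).
function documentedRole(role: Role, platform: Platform): DocumentedRole {
  // Link semantics aren't available natively on Android, so document a button.
  if (role === "link" && platform === "Android") {
    return { role: "button", announced: "Button" };
  }
  const announcements: Record<Role, string> = {
    button: "Button",
    heading: "Heading",
    image: platform === "iOS" ? "Image" : "Graphic",
    link: "Link",
    searchField: "Search field",
    tab: "Tab",
  };
  return { role, announced: announcements[role] };
}

console.log(documentedRole("link", "Android").role); // button
console.log(documentedRole("image", "Android").announced); // Graphic
```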

Heading levels

Headings aid a screen reader user’s understanding of the information hierarchy of screens. Native apps generally have a simpler UX than web content, and therefore a simpler heading structure, usually with headings that convey no hierarchy or level. However, as with HTML, iOS makes it possible to specify heading levels (from 1 to 6). This decision should take into account the overall screen heading structure used throughout your app. Always use a consistent approach. Think carefully about whether you want to do this, and always discuss it with an engineer.

Also take into account platform specifics:

- Navigation - Both VoiceOver on iOS and TalkBack on Android let users navigate via headings. However, they do not let users navigate by heading level, as a desktop screen reader does for web content.

- Android - The Android platform does not currently provide a way to natively code heading levels.

More information on semantics

Lists and tables

Native semantic roles are limited for iOS and Android apps compared to those of the web. Some common semantic roles that you might have expected to see listed here, such as those for lists and tables, cannot be defined via native roles for native app content as they can via HTML for web content, unless they are predefined as part of a standard UI element. Document any additional desired semantic roles under UX improvements, and always consider carefully whether such semantics are appropriate for app content.

Link vs button

It’s important to use the correct semantic role for the job. Links and buttons have different behaviours, which come with well established user expectations; for example, a button can be activated by pressing the spacebar on a keyboard. Use a button for in-app interactions, both within a screen and between screens. Use a link if activating the element will take the user out of the app to web content. Note that link semantics aren’t available for native Android apps; in this case, use a button.

Perceived affordance

Semantic roles communicate the meaning of content to screen reader users. They also communicate expectations about what will happen when an element is interacted with, as with a link vs a button. Expectations aren't only important for screen reader users. Visual styling gives sighted users an expectation and a perceived affordance, so it should communicate the semantic meaning of elements. For example, interactive elements should look like interactive elements. A lack of perceived affordance may cause issues for some users. For example, people who use speech recognition assistive technology, such as Voice Control on iOS, rely on being able to visually interpret meaning and behaviour to know what they can interact with, and how, via speech.

UI elements

Web content is built with HTML elements. In native apps, content is built with UI elements. These might also be referred to as UI components or UI building blocks, or, specifically on Android, as UI widgets; we’ll refer to them collectively as UI elements here. Semantic roles are applied to UI elements to give them meaning. Standard UI elements usually come with predefined semantic roles out of the box. Custom UI elements, however, do not come with any predefined semantic roles. Always try to use standard UI elements where possible.

The following standard UI elements are available:

- Material Design - Material supports best practices of user interface design and includes a set of UI component building blocks composed of UI elements for use by both the iOS and Android platforms.

- iOS - For iOS, the Human Interface Guidelines list all the available UI building blocks composed of UI elements, and the individual UI elements you can use by default, grouped under bars, views and controls.

- Android - For Android specifics see Android UI look and feel.

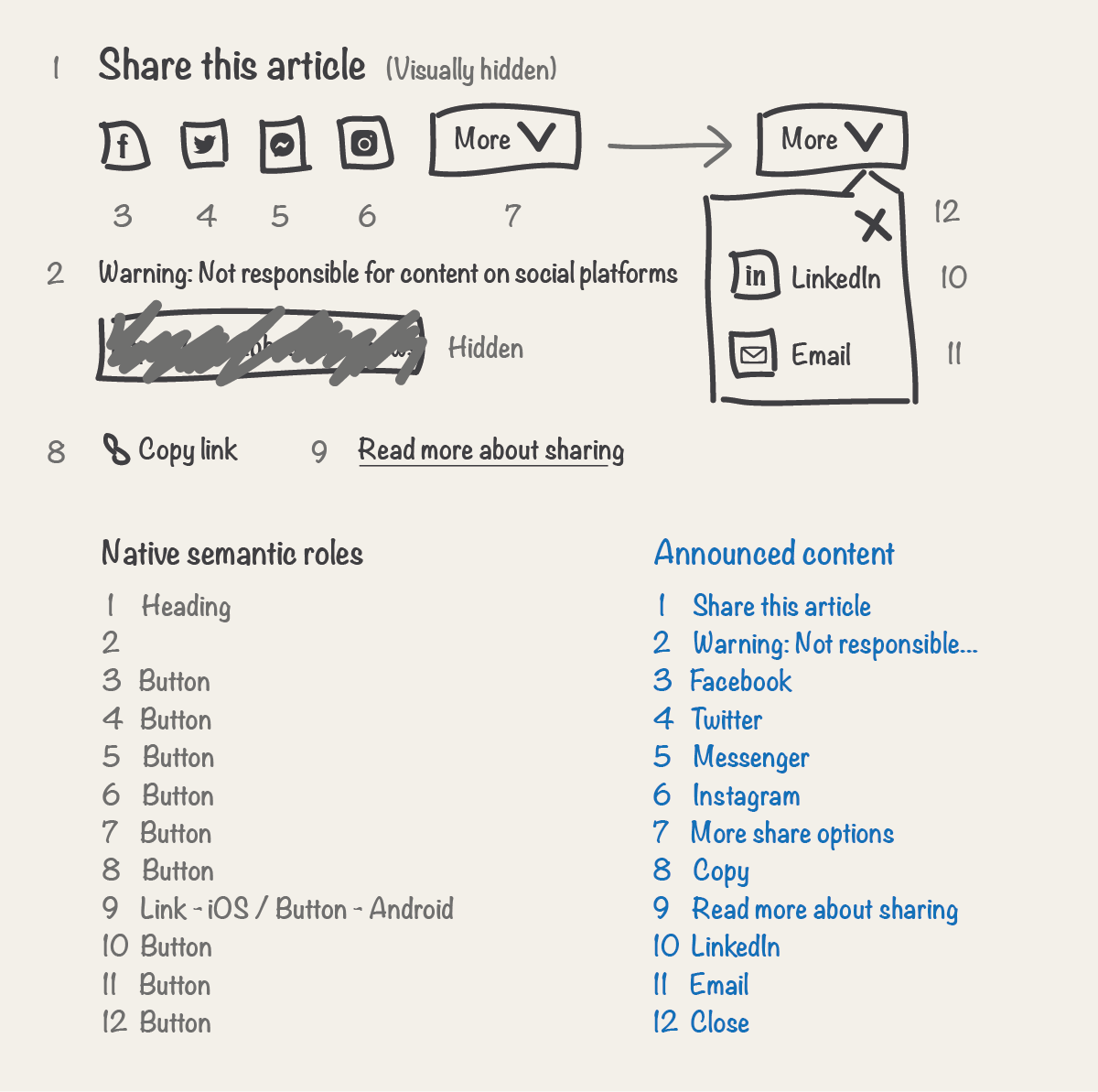

Step 6 - Define the announced content

When a screen reader encounters an element with a semantic role, meaning is usually conveyed, and the screen reader also announces the content of the element. For example, when a screen reader encounters an element with a semantic role of heading and content of ‘Screen reader UX’, the combined announcement of semantic role and content would be ‘heading, Screen reader UX’ or ‘Screen reader UX, heading’, depending on the screen reader. Like web browsers, screen readers can behave slightly differently.

For a comparable experience to that of the visual, you should document the content or text that should be announced for each element. This includes images and icons, for which the announced content is often referred to as a textual alternative or alternative text. You have already documented semantics by defining native semantic roles.

You might want to announce extra text in addition to that of the visual, for example to provide context that is obvious, or not relevant, in the visual user experience. Extra text won't form part of the visual user experience. An engineer can decide the most appropriate way to implement this, usually via an accessibility label for iOS or a content description for Android. Use extra text sparingly and only when absolutely necessary.

Document what content should be announced for each element. In our example, in addition to the text in the visual, we’ll document extra text for the 'More' button to provide context, along with the announced content for icons and images.

Other considerations for extra text

- Development - Extra text can add complexity to the code which might mean it’s harder to maintain.

- Editorial - Editorial teams may not be aware of extra text content, and in some cases may add content that doesn’t make sense when combined with it.

- Future iterations - It's easy to forget that extra text content is there, as you can’t see it. In a future iteration this information could be forgotten about and no longer make sense.

- Complexity - Extra text could cause confusion and add to complexity for some users. For example a sighted screen reader user could see one thing but hear another.

- Localisation - Extra text may need to be translated into many different languages.
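Because extra text is invisible, it helps to list it explicitly for the people affected by the considerations above. This minimal sketch (the `AnnouncedElement` type and the report wording are hypothetical) compares each element’s visible text with its documented announced content and reports only the differences. Icon alternatives show up too, since they are equally invisible on screen.

```typescript
interface AnnouncedElement {
  id: string;
  visibleText: string | null; // null when nothing is written on screen, e.g. an icon
  announcedText: string;
}

// Lists every element whose announced content differs from its visible text,
// i.e. the extra text that development, editorial and localisation should know about.
function extraTextReport(elements: AnnouncedElement[]): string[] {
  return elements
    .filter((e) => e.visibleText !== e.announcedText)
    .map((e) => {
      const shown = e.visibleText === null ? "(none)" : `"${e.visibleText}"`;
      return `${e.id}: visible ${shown}, announced "${e.announcedText}"`;
    });
}

const announced: AnnouncedElement[] = [
  { id: "more", visibleText: "More", announcedText: "More share options" },
  {
    id: "warning",
    visibleText: "Warning: Not responsible for content on social platforms",
    announcedText: "Warning: Not responsible for content on social platforms",
  },
  { id: "close", visibleText: null, announcedText: "Close" },
];

extraTextReport(announced).forEach((line) => console.log(line));
// more: visible "More", announced "More share options"
// close: visible (none), announced "Close"
```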

Other examples of announced content

Context

In our example, there’s a button with text of ‘More’. We could just announce this content. However, a screen reader user may need extra information to be sure what they’re going to be shown more of. So we’ll provide some by announcing ‘More share options’. This is especially useful when elements are navigated to out of context. Ideally you should also visually display this text, as this would be useful for everyone.

Another approach could be to provide context via a hint. This would result in a combined announcement of content, semantic role, and hint of ‘More. Button. More share options’. This isn’t the preferred UX in this case, as we can further improve the UX of the button by providing an affordance for the state of the button. Providing context via a hint in addition to announcing state (such as ‘More. Button. More share options. Expanded’) could result in an overly verbose and unclear UX.

Icons

If an icon is key to understanding, it needs announced text content that communicates its meaning and purpose. This is referred to as a textual alternative. For example, if an icon such as a video play button doesn’t have any associated text that conveys its meaning and purpose, a screen reader user would need a textual alternative. In our example, the ‘X’ close button is such a case. We could just announce ‘Close’, though this doesn’t give any context. Context could be provided by announcing ‘Close more share options’. However, the preferred UX in this case is to provide context later via a hint. This results in the semantic role being announced before the context, which aids browsing of content: ‘Close. Button. Close more share options’.

Images

The announced content for an image is referred to as alternative text or text alternative. This should describe the image.

Key information

Try to provide key information first in text alternatives. This aids browsing of content, so users don't always have to listen to the full text. In our example, the function of button elements 3 to 6 is to share an article. To uniquely describe the target of each button, we also need to announce the social platform. The social platform is what distinguishes each share button from the others, so we'll announce it first, followed by the function or context, for example 'Facebook, share this article'. However, the preferred UX in this case is to provide context later via a hint. This results in the semantic role being announced before the context, aiding browsing further: ‘Facebook. Button. Share this article’.

Abbreviations

Commonly known and spoken

Abbreviations which are commonly known and spoken do not need unabbreviated announced content. For example GMT is an abbreviation for Greenwich Mean Time. The abbreviation of this is commonly spoken.

Commonly known but not spoken

Abbreviations which are commonly known but not usually spoken should have unabbreviated announced content, or preferably not be abbreviated at all, as this would also help other users, such as those with a learning impairment. For example, the date ‘Thursday 18th December’ is commonly abbreviated to ‘Thur 18th Dec’, though you would not say ‘Thur’ or ‘Dec’ even if it was written like that. Another example is the abbreviated timestamp ‘1h’, which is short for ‘1 hour ago’. Providing both abbreviated and unabbreviated content is also a less than optimal code solution.

Not commonly known

Abbreviations which are not commonly known should have unabbreviated announced content, or preferably not be abbreviated at all, as this would help all users.
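When an abbreviation has to stay on screen, its unabbreviated announced content can be documented systematically. The sketch below is illustrative only: the glossary entries come from the examples above, while the `expandForAnnouncement` helper and the "number followed by h" timestamp pattern are our own assumptions. Remember that the preferred option is usually not to abbreviate in the first place.

```typescript
// Illustrative glossary: abbreviations that are commonly known but not spoken.
const unspokenAbbreviations = new Map<string, string>([
  ["Thur", "Thursday"],
  ["Dec", "December"],
]);

// Expands relative timestamps such as "1h" (assumed format: a number then "h").
function expandTimestamp(word: string): string | null {
  const match = /^(\d+)h$/.exec(word);
  if (match === null) return null;
  const hours = Number(match[1]);
  return `${hours} ${hours === 1 ? "hour" : "hours"} ago`;
}

// Builds announced content word by word. Anything not recognised is kept as-is,
// so commonly spoken abbreviations such as "GMT" are left alone.
function expandForAnnouncement(text: string, glossary: Map<string, string> = unspokenAbbreviations): string {
  return text
    .split(" ")
    .map((word) => glossary.get(word) ?? expandTimestamp(word) ?? word)
    .join(" ");
}

console.log(expandForAnnouncement("Thur 18th Dec")); // Thursday 18th December
console.log(expandForAnnouncement("1h")); // 1 hour ago
console.log(expandForAnnouncement("GMT")); // GMT
```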

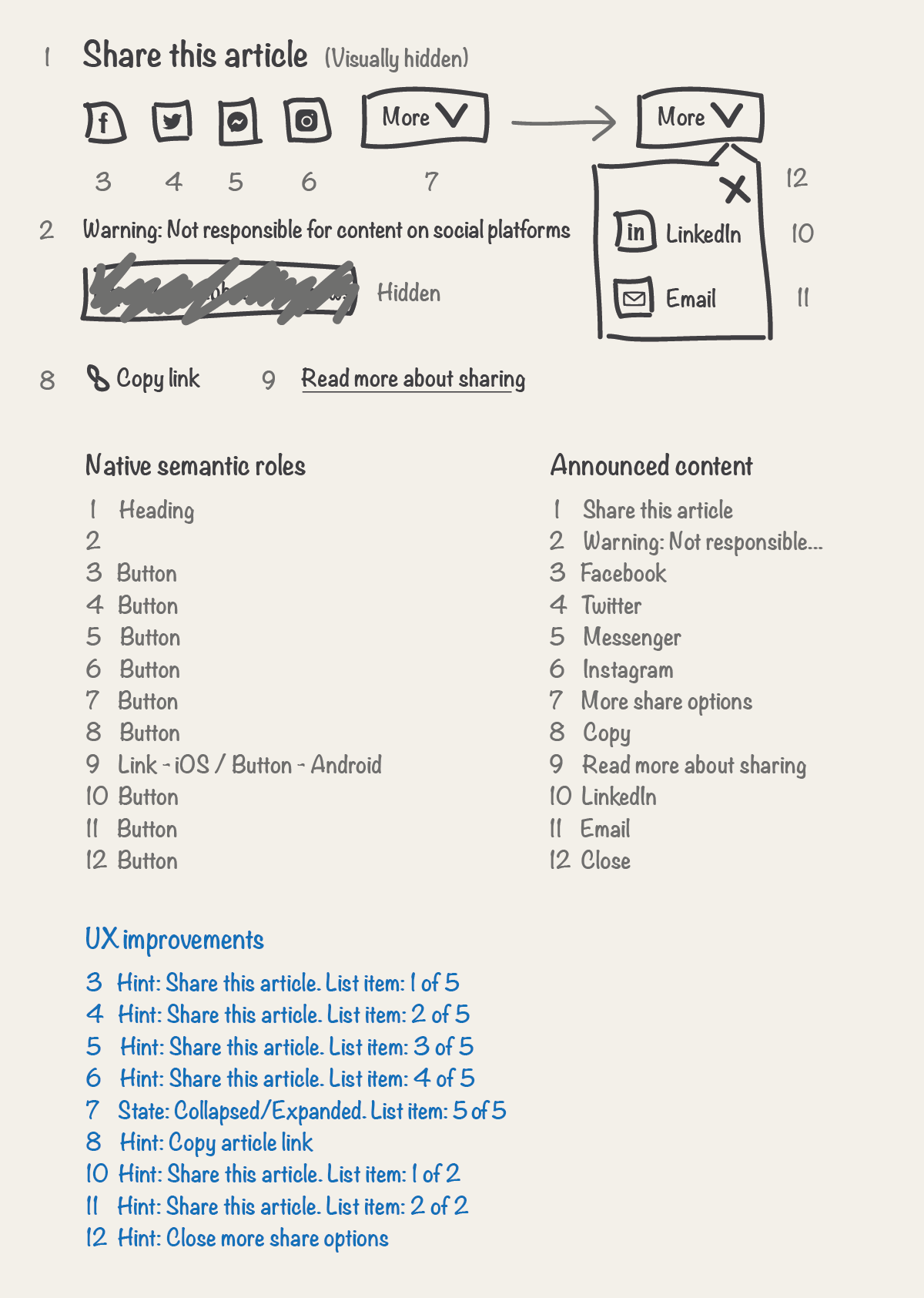

Step 7 - Add any UX improvements

Use UX improvements sparingly. They are particularly relevant for in-screen interactions and dynamic content. In our example, the 'More' button has a downwards arrow icon. This is a visual clue for sighted users that hints at the behaviour of the button upon activation, also known as an affordance. Could the non-visual UX be improved by providing a comparable affordance for the button behaviour?

Consider every element and document any desired UX improvements. Consider UX improvements such as additional semantic roles and hints. Also document states for relevant actionable elements, changes to language and any additional functionality that would improve the UX. In our example, for the share buttons, we’ll provide context with a hint. We can also improve the UX with additional list semantics as the share buttons form a collection of related items. The combined announcement of content, semantic roles and hint for the first share button will now be ‘Facebook. Button. Share this article. 1 of 5’.

Additional semantic roles

If appropriate, provide additional semantic roles that would improve the user experience but could not be defined by native semantic roles. Use them sparingly. Remember that the UX of native apps is often simpler than that of web content, so carefully consider whether any additional semantics are appropriate.

- Which additional semantic roles should I consider? This may be particularly relevant for list, table and adjustable content. Unless part of a standard UI component, these will not be provided by default. Document any additional semantics that would improve the UX.

- List - A collection of related items. This is conveyed by telling the user how far they are through a collection of related items and how many items there are. For example, in a list with 5 items, list semantics for the first item would be announced as ‘1 of 5’. List semantics cannot be defined via native roles for native app content as they can via HTML for web content, unless predefined as part of a standard UI component, such as tabs. Document any additional list semantics that would improve the UX.

- Table - Table semantics cannot be defined via native roles for native app content, as they can be via HTML for web content, unless predefined as part of a standard UI component. Document any additional table semantics that would improve the UX.

- Adjustable - An element that can be adjusted by the user through a range of values, such as a slider used to change the volume or provide a star rating. For coding details see adjustable for iOS and slider for Android.

- Tab - If your component includes tabs as part of a standard UI component, list semantics will usually be provided by default. It is still important to document them.

- Not needed often - Additional semantic roles will not be needed often. The W3C has a list of semantic roles that can be used for structure in web content; although it is web-specific, many of the same roles could be considered for use. Also see widget roles. For a full list, see definitions of roles.
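List semantics add each item’s position and the total to its announcement. This hypothetical helper shows the documented result for the example share buttons. Only Facebook is named in our example, so the other entries are placeholders, and how the position is implemented and phrased on each platform is an engineering decision.

```typescript
// Appends list semantics ("1 of 5") to each item's documented announcement,
// numbering the items in reading order.
function withListPosition(items: string[]): string[] {
  return items.map((text, index) => `${text}. ${index + 1} of ${items.length}`);
}

// Only Facebook is named in our example; the other entries are placeholders.
const shareButtons: string[] = [
  "Facebook. Button. Share this article",
  "Platform 2. Button. Share this article",
  "Platform 3. Button. Share this article",
  "Platform 4. Button. Share this article",
  "Platform 5. Button. Share this article",
];

console.log(withListPosition(shareButtons)[0]);
// Facebook. Button. Share this article. 1 of 5
```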

Hints

Hints can be used to provide context and help users understand what will happen when they perform an action on an element. Hints should be brief and have a clear purpose. In our example, we’ll provide a hint of ‘Close more share options’ to provide context for the ‘Close’ button and help the user understand what will happen upon activation. This will result in a combined announcement of content, semantic role, and hint on iOS of ‘Close. Button. Close more share options’, and on Android of ‘Close. Close more share options. Button’.

- Be succinct - Hints should be brief and have a clear purpose.

- Consistency - Always use a consistent approach for hints throughout your component and app.

- iOS - On iOS, hints are announced after any native semantic roles. In the code, this will be achieved with an accessibility hint.

- Android - On Android, hints are announced before any native semantic roles. In the code, this will be achieved by adding information to the end of the content description.

- VoiceOver - Users can turn hints off in VoiceOver, so that they aren’t announced.

- State - If both announcement of state and a hint giving context is desired for an element, consider if it would be better to provide context via the announced content rather than via a hint. Providing context via a hint in addition to announcing state could result in an overly verbose and unclear UX.

States

States should be provided to improve the UX of relevant actionable elements, such as buttons and checkboxes. In our example, the ‘More’ button is one such element: providing an expanded or collapsed state affordance would improve the UX. This results in a combined announcement of content, semantic role and state. On iOS, this is ‘More share options. Button. Collapsed’ when collapsed and ‘More share options. Button. Expanded’ when expanded. On Android, it is ‘More share options. Collapsed. Button’ when collapsed and ‘More share options. Expanded. Button’ when expanded.
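The announcement orders described for hints and states can be summarised as one rule per platform: on iOS, content, then role, then state or hint; on Android, content, then state or hint, then role. The sketch below is a documentation aid under those assumptions, not a model of real screen reader behaviour. The `Announceable` type, the `". "` separator (standing in for a pause) and the option to drop hints for a VoiceOver user who has turned them off are our own choices. It reproduces the examples above.

```typescript
type Platform = "iOS" | "Android";

interface Announceable {
  content: string; // announced content, e.g. "More share options"
  role?: string; // native semantic role, e.g. "Button"
  state?: string; // e.g. "Collapsed" or "Expanded"
  hint?: string; // e.g. "Close more share options"
}

// Composes an announcement in the order documented in this guide.
// iOS (VoiceOver): content, role, state, hint.
// Android (TalkBack): content, state, hint, role.
// Putting state before hint is our assumption; the guide advises against using both.
// voiceOverHints = false models a VoiceOver user who has turned hints off.
function announce(element: Announceable, platform: Platform, voiceOverHints = true): string {
  const includeHint = platform === "Android" || voiceOverHints;
  const extras = [element.state, includeHint ? element.hint : undefined];
  const parts =
    platform === "iOS"
      ? [element.content, element.role, ...extras]
      : [element.content, ...extras, element.role];
  return parts.filter((part): part is string => part !== undefined && part !== "").join(". ");
}

const closeButton: Announceable = { content: "Close", role: "Button", hint: "Close more share options" };
const moreButton: Announceable = { content: "More share options", role: "Button", state: "Collapsed" };

console.log(announce(closeButton, "iOS")); // Close. Button. Close more share options
console.log(announce(closeButton, "Android")); // Close. Close more share options. Button
console.log(announce(moreButton, "iOS")); // More share options. Button. Collapsed
console.log(announce(moreButton, "Android")); // More share options. Collapsed. Button
console.log(announce(closeButton, "iOS", false)); // Close. Button
```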

You will usually only need to use a handful of states from the following:

- Checked - Indicates the state of checkboxes and radio buttons.

- Collapsed - Indicates content is currently collapsed.

- Disabled - Indicates that an element is disabled.

- Expanded - Indicates content is currently expanded.

- Pressed - Indicates the state of a toggle button.

- Selected - Indicates that an element is currently selected, such as a tab. If your component includes tabs as part of a standard UI element, selected states will usually be provided by default, but still document them.

The W3C has a list of ARIA states that can be applied to HTML elements for web content; although it is web-specific, many of the same states could be considered for use. For native app specifics, see:

- Material Design - Material supports best practices of user interface design and includes a set of UI component building blocks composed of UI elements for use by both the iOS and Android platforms.

- iOS - For iOS, the Human Interface Guidelines list all the available UI building blocks composed of UI elements, and the individual UI elements you can use by default, grouped under bars, views and controls.

- Android - For Android specifics see Android UI look and feel.

Language

Note any changes in the language of content. This helps a screen reader announce the content with the appropriate accent.

Additional functionality

If appropriate, also provide additional functionality that could improve the screen reader UX, such as a live region for dynamic content:

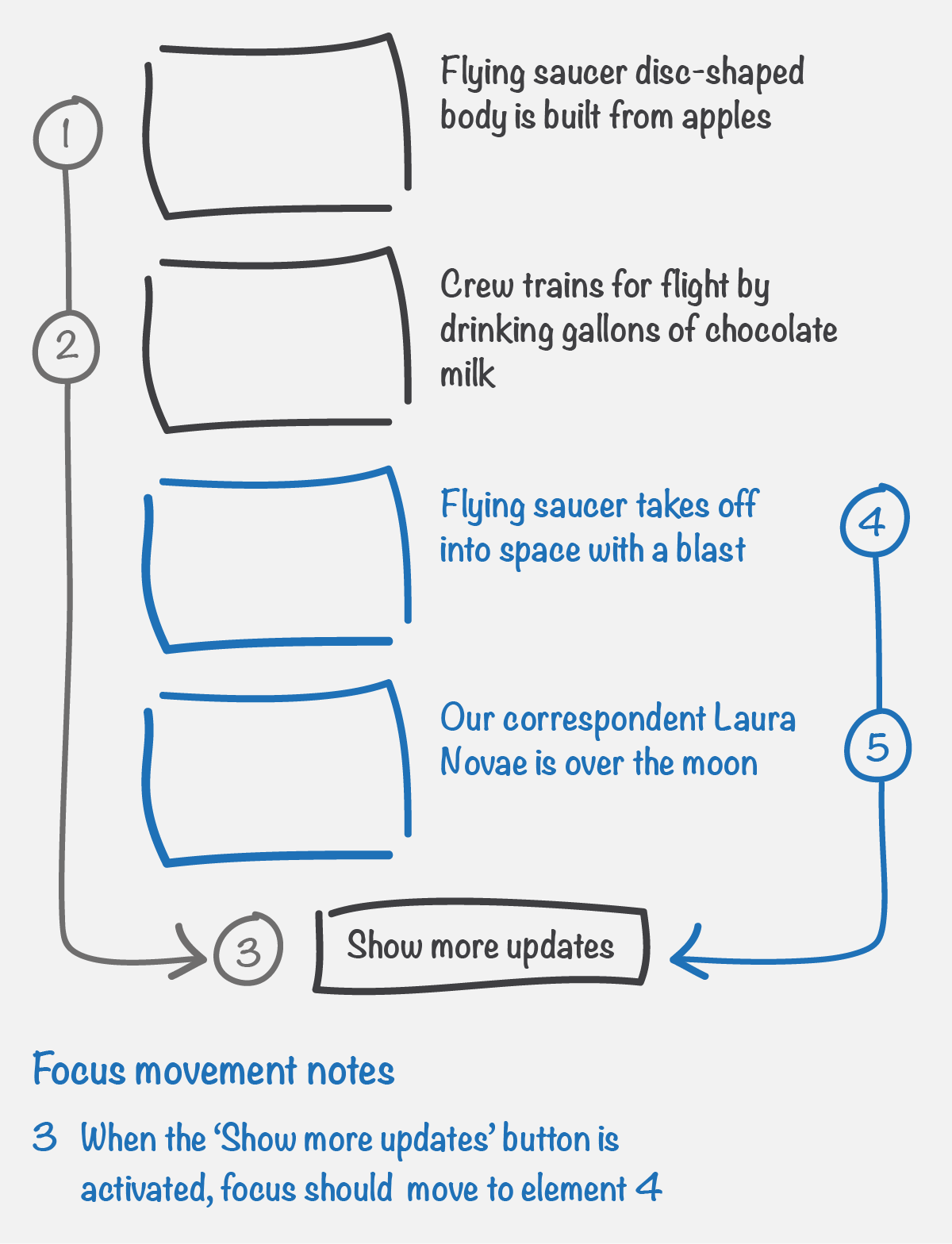

- Notify users of new or updated content without interrupting the user's current task - For example, in the case of a news update being added to a page or screen. Without a notification screen reader users may be unaware of this. Notify the user of the new content when they have finished their current task.

- Notify users of new or updated content immediately - For example, when an error message is added to a screen. Without a notification, screen reader users may be unaware of it. Notify the user of the new content immediately (this behaviour may not be desired for all error messages).
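The difference between these two notification behaviours is one of timing. This hypothetical simulation illustrates it. The `AnnouncementQueue` class is our own invention; the names "polite" and "assertive" are borrowed from ARIA live regions, and Android uses the same names for its live region modes. In the sketch, a polite notification waits until the current task is finished, while an assertive one is spoken immediately. Real screen reader timing is more nuanced.

```typescript
type Politeness = "polite" | "assertive";

interface LiveNotification {
  text: string;
  politeness: Politeness;
}

// Simulates when documented notifications would be spoken relative to the
// user's current task. Not a real screen reader or platform API.
class AnnouncementQueue {
  private pending: string[] = [];
  readonly spoken: string[] = [];

  notify(notification: LiveNotification): void {
    if (notification.politeness === "assertive") {
      this.spoken.push(notification.text); // spoken immediately
    } else {
      this.pending.push(notification.text); // waits for the current task to finish
    }
  }

  finishCurrentTask(): void {
    this.spoken.push(...this.pending);
    this.pending = [];
  }
}

const queue = new AnnouncementQueue();
queue.notify({ text: "New update added", politeness: "polite" });
queue.notify({ text: "Error message", politeness: "assertive" });
console.log(queue.spoken.join(" | ")); // Error message
queue.finishCurrentTask();
console.log(queue.spoken.join(" | ")); // Error message | New update added
```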

Standard and custom UI elements

Standard UI elements

Standard UI elements may include some default UX improvements, but it is still important to document them. Not sure what the default UX improvements are for a UI element? Ask an engineer for an example on the relevant platforms. If you can improve the user experience, then do so. Keep in mind that standard UI elements are used widely across the app ecosystem, and users will thank you for consistency.

Custom UI elements

Custom UI elements do not come with any predefined UX improvements, so be sure to fully consider any such elements.

Step 8 - Define focus order and behaviour

All actionable elements, such as links and buttons, are by default focusable with a screen reader and with other assistive technologies such as switch devices. The order in which these elements can be focused and interacted with, for example with a keyboard by pressing the ‘TAB’ key, is important to consider. This order is referred to as the focus order, or sometimes the tab order. All focusable elements should be visibly indicated when they receive focus, and the focus order should follow a logical sequence. This is usually expected to run from left to right as you move through elements, and down a screen, though there will be some exceptions. By default, the focus order is defined by the content order.

Define the focus order by adding a directional line between actionable elements. Also document when focus needs to be moved on the user’s behalf after an in-screen interaction. In our example, in addition to defining the focus order, we’ll add some notes on focus movement for the 'Close' button upon activation.

Moving focus on the user’s behalf

It’s important to consider when focus needs to be moved on the user’s behalf after an in-screen interaction. Interactions should follow a logical sequence and provide a good user experience. For example, if a ‘Show more updates’ button is activated and new content is inserted above the button, by default focus would remain on the button after activation. This would be a frustrating experience for assistive technology users, who would then have to find the start of the new content and navigate to it. In this example, to improve the UX, focus should be moved on the user’s behalf to the new content upon activation of the button.
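By default, the focus order follows the content order, and focus stays where it is after an interaction unless it is moved. This hedged sketch (the `FocusElement` type, the ids and the helper names are hypothetical) derives the default focus order from a documented content order, and records the focus target for the ‘Show more updates’ example: the start of the newly inserted content.

```typescript
interface FocusElement {
  id: string;
  actionable: boolean; // links, buttons and other interactive elements
}

// Default focus order: actionable elements in content order.
function focusOrder(contentOrder: FocusElement[]): string[] {
  return contentOrder.filter((e) => e.actionable).map((e) => e.id);
}

// Where focus should land after activation: the start of any newly inserted
// content, otherwise it stays on the activated element.
function focusAfterActivation(activatedId: string, insertedIds: string[]): string {
  return insertedIds[0] ?? activatedId;
}

const shareContentOrder: FocusElement[] = [
  { id: "heading", actionable: false },
  { id: "warning", actionable: false },
  { id: "facebook", actionable: true },
  { id: "more", actionable: true },
  { id: "close", actionable: true },
];

console.log(focusOrder(shareContentOrder).join(" -> ")); // facebook -> more -> close
console.log(focusAfterActivation("show-more-updates", ["update-3", "update-4"])); // update-3
```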

Step 9 - Define any containers

Typically we use colour or other styling to identify areas of screens or pages visually. On the web for example, the BBC News website has a red block at the top. Visually this groups and identifies the page banner content. In the non-visual experience, you need another way to do this for both the web and native apps.

Landmarks provide a way for screen readers to identify areas of web pages, and containers are the equivalent in a native app. They enable users to identify and navigate to areas of a screen. Note that navigation via containers is not currently possible with TalkBack on Android.

Note that landmarks and containers are different from headings. Use the ‘show landmarks’ and ‘show headings’ buttons at the top of the ARIA landmarks examples page to see how. The same principles apply to containers, the native app equivalent of landmarks.

If appropriate, document any containers and associated labels for your component. Also make a note of any containers your component should sit within, if relevant. For web content there are 8 landmark roles that you can use; think along similar lines for a native app. Use the steps in the General Principles of Landmark Design to decide whether a container is appropriate for your component and/or part of it. This won't always be necessary. In our example, we’ll add a fictional region landmark.

Step 10 - Validate the experience

Validate the screen reader user experience by describing this to the reviewer as part of an accessibility design review.

Next steps

When the screen reader UX has been documented, an accessibility design review can take place. The next step in achieving an accessible component is writing accessibility acceptance criteria, for which this documentation, along with your visual, will form the basis. Your component is then ready for the development phase.

UX review

When the component has been developed and is ready for UX review, an accessibility swarm is your chance to review and sign off the screen reader UX. Designers are responsible for reviewing the experience for all users, not just the visual UX. Use this documentation to review against.

Note: this guide takes one approach; there are other ways you could do this.