How good really? (it’s complicated)

By Alexandra Deschamps-Sonsino

One of the outputs of this project is a set of 72 questions to help companies reflect on the digital product they have built or are building. Some of these questions relate to the product itself, but others relate to how the company operates.

Depending on how you answer the questions about the product, they might highlight problems you could encounter with regulators, advertising standards bodies, and consumer rights and digital rights organisations.

Depending on how you answer the questions about your internal processes, they might flag issues with worker rights and health and safety measures.

So how would you feed that back to someone building an app, a game or a piece of media? Building a rating scale and giving them a score feels like an easy solution. Put all this on a Typeform and let people click through and get a Low/Medium/High rating at the end. Job done. Not quite.

In the past I worked on Better IoT, a community-led questionnaire for IoT startups, and on a sustainable finance product in the banking sector. Both taught me that rating scales are complicated.

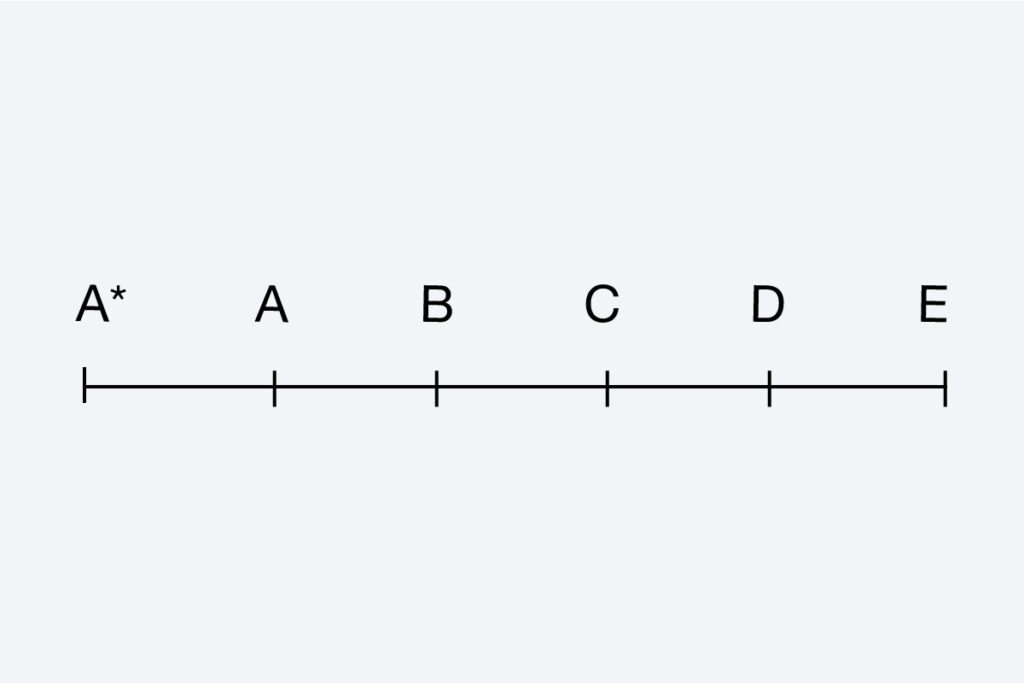

Unlike A levels, where scoring and success are clearly defined and agreed by all stakeholders, scoring how well a product supports all 14 human values is hard. Is every question as important as every other question? Is every question easy to act on if a change is required? How do the values interact with one another? Are other kinds of questions possible beyond the ones we chose? Do they all apply to every type of digital output? The answers to these questions shape how a scaled score should be designed.

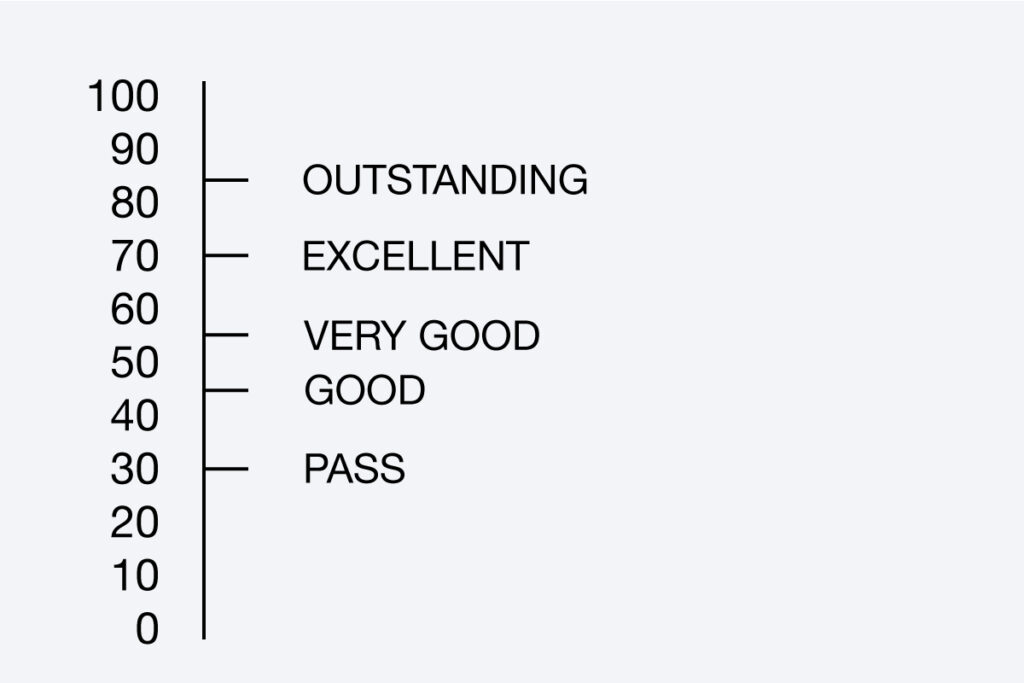

Scores can reward either change or ‘business as usual’. Rewarding the status quo can happen when the words attached to a score hide how poor the underlying number really is.

A silver medal at last week’s Tokyo Olympics is amazing, but a Silver rating for a building under the Hong Kong-based BEAM green building certification scheme corresponds to anything between 55% and 65%. And the UK’s BREEAM calls a new building ‘Very Good’ if it scores between 55% and 69%. I’m not sure my A-level profs would have agreed with this assessment.
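To make the point concrete, here is a minimal Python sketch that maps a percentage score to a BREEAM label. The band thresholds (Pass from 30%, Good from 45%, Very Good from 55%, Excellent from 70%, Outstanding from 85%) are an assumption based on the BREEAM New Construction scheme and may differ between scheme versions; the names `BREEAM_BANDS` and `breeam_label` are hypothetical.

```python
# Assumed BREEAM New Construction bands: (minimum percentage, label), highest first.
BREEAM_BANDS = [
    (85, "Outstanding"),
    (70, "Excellent"),
    (55, "Very Good"),
    (45, "Good"),
    (30, "Pass"),
]


def breeam_label(score_pct: float) -> str:
    """Return the label for a percentage score (hypothetical helper)."""
    for threshold, label in BREEAM_BANDS:
        if score_pct >= threshold:
            return label
    return "Unclassified"


# Two buildings 14 points apart receive exactly the same word.
for score in (55, 69):
    print(score, breeam_label(score))
```

Both scores print as "Very Good": the label flattens a gap that the number alone would make obvious.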

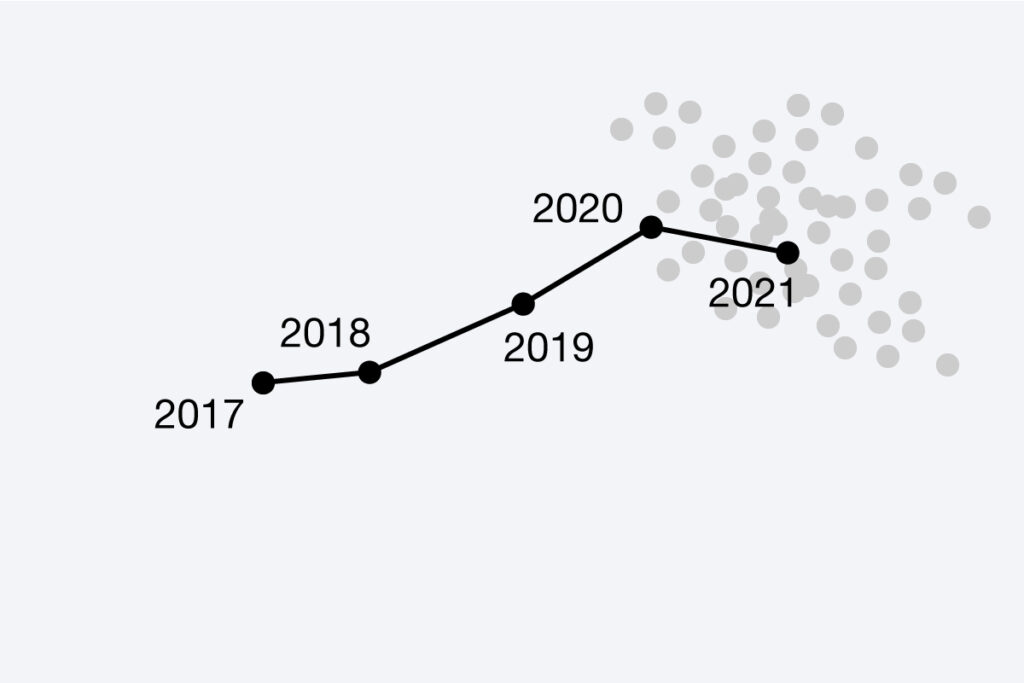

Another way to show you how ‘good’ you are is to compare you to others. This is quite popular in the ESG (environmental, social and corporate governance) world. Some ESG ratings ask companies to pay to fill in a long questionnaire of 60+ questions every year, and in return offer a report that only compares them to their peer group. The more companies fill in that assessment, the more useful the comparison becomes, but no one wants to pay to find out they’re at the back of the queue. Some ESG ratings are publicly disclosed by default (like the Carbon Disclosure Project), but most aren’t. And a point cloud visualisation hides where the real areas for improvement lie.

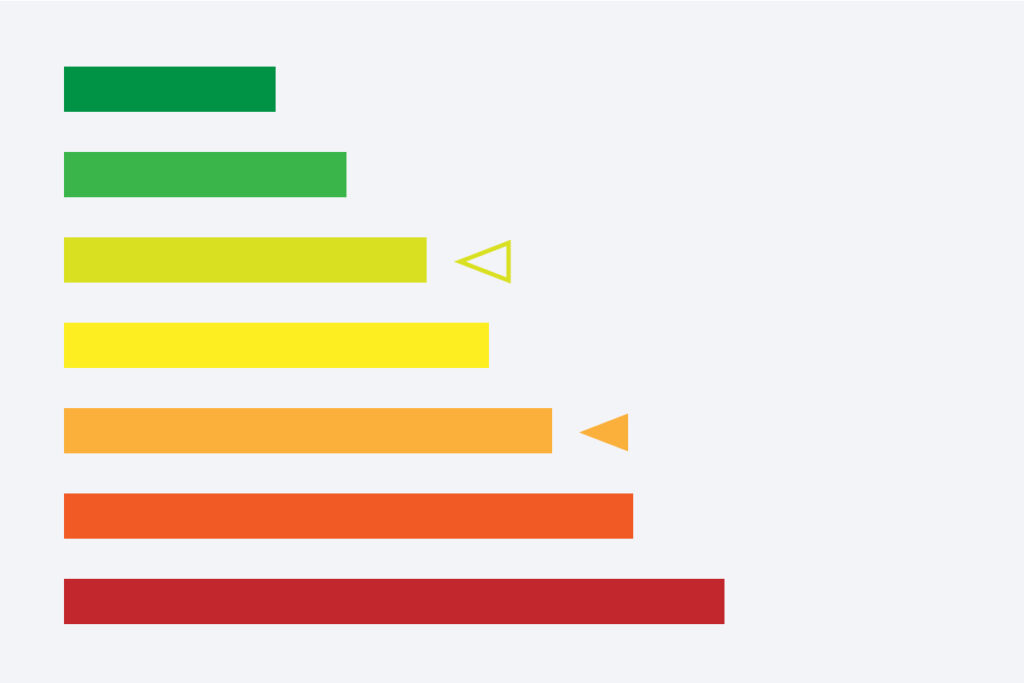

EPCs are a good example of transparent assessment. An EPC (energy performance certificate) is issued when you move into a property in the UK. It shows you how energy efficient the property is on an A-G scale that looks a lot like the ones you get on new appliances. It gives you the current rating alongside the rating you’d get if you made the recommended improvements. It’s a pretty neat way of exposing some of the complexity behind the assessment without overwhelming the recipient, while encouraging them to improve.
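The EPC idea of reporting a current and a potential rating side by side can be sketched in a few lines of Python. This assumes the SAP energy efficiency bands used on UK domestic EPCs (A: 92 and above, B: 81-91, C: 69-80, D: 55-68, E: 39-54, F: 21-38, G: 1-20); the helper names and the example scores of 58 and 74 are hypothetical.

```python
# Assumed SAP bands for UK domestic EPCs: (lower bound, band letter), best first.
EPC_BANDS = [(92, "A"), (81, "B"), (69, "C"), (55, "D"), (39, "E"), (21, "F"), (1, "G")]


def epc_band(sap: int) -> str:
    """Map a SAP rating to its EPC band letter (hypothetical helper)."""
    for lower, band in EPC_BANDS:
        if sap >= lower:
            return band
    raise ValueError("SAP rating must be at least 1")


def epc_summary(current_sap: int, potential_sap: int) -> str:
    """Show where a property is now and where the recommended changes would take it."""
    return (
        f"Current: {epc_band(current_sap)} ({current_sap}), "
        f"potential: {epc_band(potential_sap)} ({potential_sap})"
    )


print(epc_summary(58, 74))  # Current: D (58), potential: C (74)
```

Keeping both the letter and the number visible, and pairing today's result with an achievable target, is what makes the certificate both readable and actionable.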

Reflecting on this (and considering how long it took us to arrive at a set of questions we were happy with), we decided not to devise a scoring strategy that would distract from the work, and instead published the 72 questions along with longer-form workshop questions.

I’m sure the team will attempt some kind of weighting eventually, and we’ve already labelled questions on a scale of importance (high, medium, low), but trying to make sense of responses is a fool’s errand for now. Colleagues at BBC Bitesize who make quizzes might be able to contribute a different perspective, but right now it feels too dangerous.

As we know from the last 5 years of ethical challenges online, it’s too easy to let people get away with poor user experience because their product succeeds in other ways. We have to be able to see the whole picture and interrogate companies deeply.